Instagram this morning announced several changes to its moderation policy. The most significant is that it will now warn users when their account is at risk of being disabled, before the account is actually disabled. This change addresses a longstanding issue where users would launch Instagram only to find that their account had been shut down without any warning.

It's one thing for Instagram to disable accounts for violating its stated guidelines, but the service's automated systems haven't always gotten things right. The company has come under fire before for banning innocuous photos, such as images of mothers breastfeeding their children, or art. (Or, you know, Madonna.)

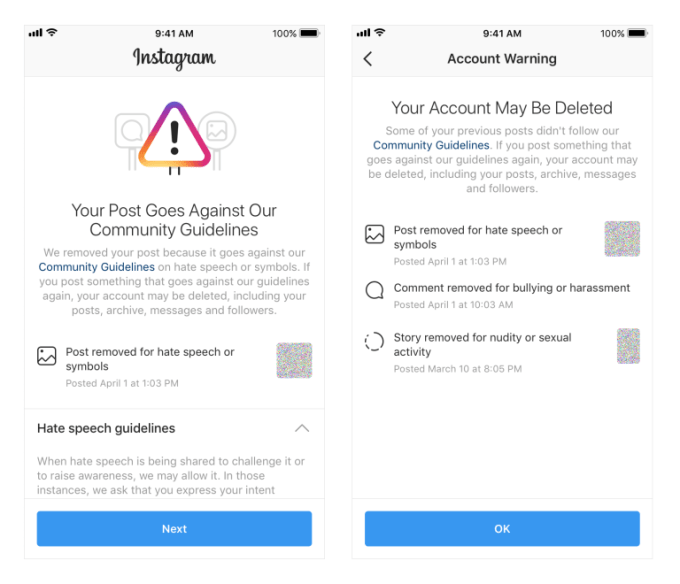

Now the company says it will introduce a new notification process that warns users if their account is at risk of being disabled. In some cases, the notification will also let them appeal the removal of the content in question.

For now, users will be able to appeal moderation decisions around Instagram’s nudity and pornography policies, as well as its bullying and harassment, hate speech, drug sales and counter-terrorism policies. Over time, Instagram will expand the appeal capabilities to more categories.

The change means users won't be caught off guard by Instagram's enforcement actions. They'll also be able to appeal a decision directly in the app, rather than only through the Help Center, as was previously the case.

In addition, Instagram says it will step up enforcement against bad actors.

Previously, it could remove accounts in which a certain percentage of the content violated its policies. Now it will also be able to remove accounts that rack up a certain number of violations within a given window of time.

“Similarly to how policies are enforced on Facebook, this change will allow us to enforce our policies more consistently and hold people accountable for what they post on Instagram,” the company says in its announcement.

The changes follow a recent threat by the Adult Performers Actors Guild to bring a class-action lawsuit against the photo-sharing network. The organization claimed Instagram was banning adult performers' accounts even when the accounts showed no nudity.

“It appears that the accounts were terminated merely because of their status as an adult performer,” James Felton, the Adult Performers Actors Guild legal counsel, told The Guardian in June. “Efforts to learn the reasons behind the termination have been futile,” he said, adding that the Guild was considering legal action.

The Electronic Frontier Foundation (EFF) also launched an anti-censorship campaign this year, TOSSed Out, which aims to highlight how social media companies unevenly enforce their terms of service. As part of its efforts, the EFF examined the content moderation policies of 16 platforms and app stores, including Facebook, Twitter, the Apple App Store and Instagram.

It found that only four companies (Facebook, Reddit, Apple and GitHub) had committed to telling users, when their content was censored, which community guideline violation or legal request had led to that action.

“Providing an appeals process is great for users, but its utility is undermined by the fact that users can’t count on companies to tell them when or why their content is taken down,” said Gennie Gebhart, EFF associate director of research, at the time of the report. “Notifying people when their content has been removed or censored is a challenge when your users number in the millions or billions, but social media platforms should be making investments to provide meaningful notice.”

Instagram's policy change targeting repeat offenders is rolling out now. The ability to appeal decisions directly within the app will arrive in the coming months.