Microsoft’s Seeing AI is an app that lets blind and limited-vision folks convert visual data into audio feedback, and it just got a useful new feature. Users can now use touch to explore the objects and people in photos.

It’s powered by machine learning, of course, specifically object and scene recognition. All you need to do is take a photo or open one up in the viewer and tap anywhere on it.

“This new feature enables users to tap their finger to an image on a touch-screen to hear a description of objects within an image and the spatial relationship between them,” wrote Seeing AI lead Saqib Shaikh in a blog post. “The app can even describe the physical appearance of people and predict their mood.”

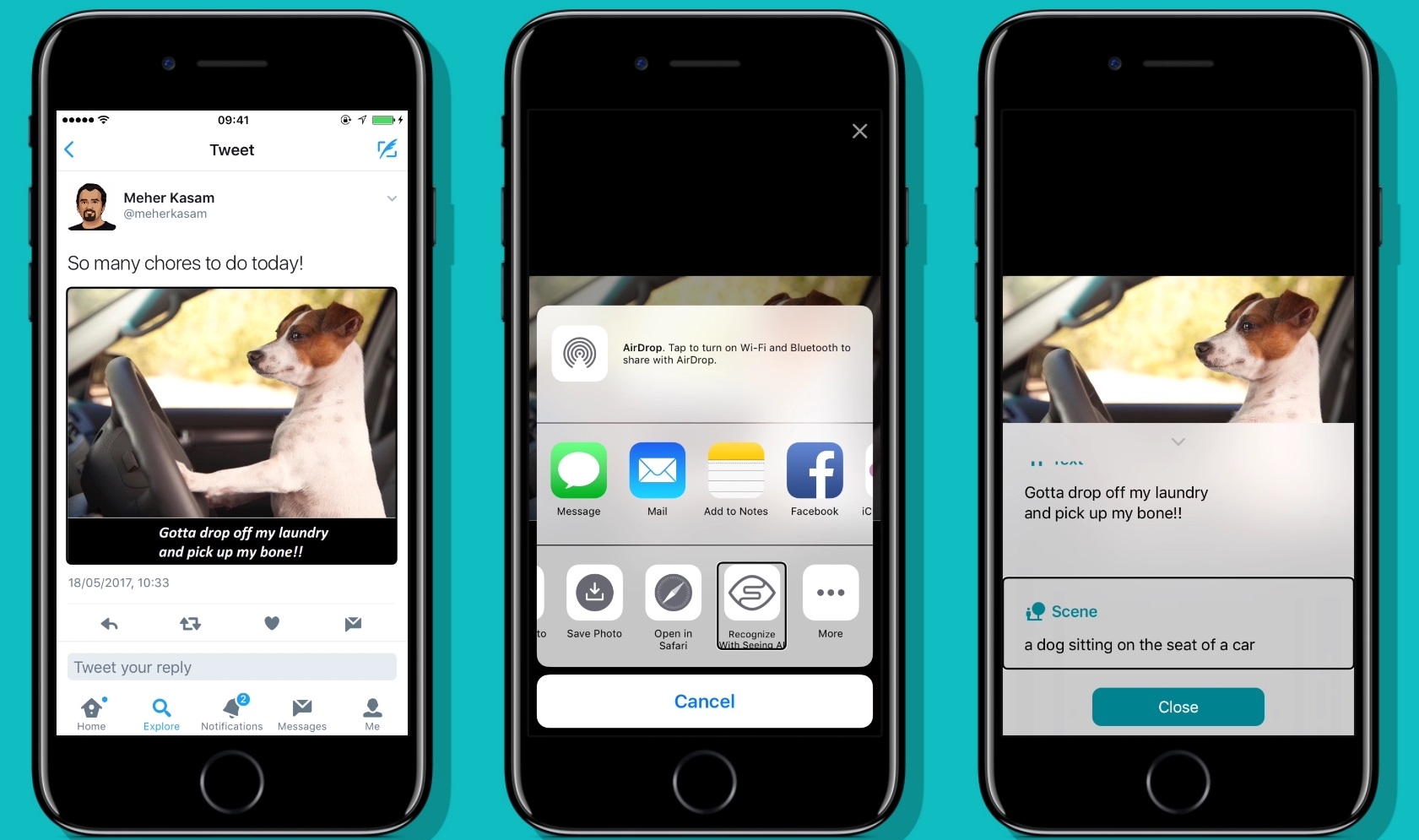

Because there’s facial recognition built in as well, you could very well take a picture of your friends and hear who’s doing what and where, and whether there’s a dog in the picture (important) and so on. This was possible on an image-wide scale already, as you can see in this image:

But the app now lets users tap around to find where objects are — obviously important to understanding the picture or recognizing it from before. Other details that may not have made it into the overall description may also appear on closer inspection, such as flowers in the foreground or a movie poster in the background.
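
For the curious, here is a rough sketch of how tap-to-explore could work in principle. It is not Microsoft's implementation, which hasn't been published in this post. It assumes a recognizer has already produced labeled bounding boxes in normalized coordinates; the `DetectedObject` type and `describe_tap` function are hypothetical names made up for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DetectedObject:
    """Hypothetical recognizer output: a label plus a bounding box.

    Coordinates are assumed to be normalized to the 0..1 range,
    with (x, y) as the top-left corner of the box.
    """
    label: str
    x: float
    y: float
    width: float
    height: float

    def contains(self, px: float, py: float) -> bool:
        # True when the tapped point falls inside this box.
        return (self.x <= px <= self.x + self.width
                and self.y <= py <= self.y + self.height)

    @property
    def area(self) -> float:
        return self.width * self.height


def describe_tap(objects: List[DetectedObject],
                 px: float, py: float) -> Optional[str]:
    """Return the label to speak for a tap at (px, py), or None.

    When boxes overlap, the smallest one wins, so a small detail
    (flowers in front of a person) is not hidden by a larger box.
    """
    hits = [obj for obj in objects if obj.contains(px, py)]
    if not hits:
        return None
    return min(hits, key=lambda obj: obj.area).label
```

Preferring the smallest overlapping box is just one plausible tie-break; it is what would let small details like foreground flowers surface when you tap on them, even if a larger object sits behind them.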

In addition to this, the app now natively supports the iPad, which is certainly going to be nice for the many people who use Apple’s tablets as their primary interface for media and interactions. Lastly, there are a few improvements to the interface so users can order things in the app to their preference.

Seeing AI is free, and you can download it for iOS devices from the App Store.