Light is the fastest thing in the universe, so trying to catch it on the move is necessarily something of a challenge. We’ve had some success, but a new rig built by Caltech scientists pulls down a mind-boggling 10 trillion frames per second, meaning it can capture light as it travels along — and they have plans to make it a hundred times faster.

Understanding how light moves is fundamental to many fields, so it isn’t just idle curiosity driving the efforts of Jinyang Liang and his colleagues — not that there’d be anything wrong with that either. But there are potential applications in physics, engineering, and medicine that depend heavily on the behavior of light at scales so small, and so short, that they are at the very limit of what can be measured.

You may have heard about billion- and trillion-FPS cameras in the past, but those were likely “streak cameras” that do a bit of cheating to achieve those numbers.

If a pulse of light can be replicated perfectly, you can send one every millisecond but offset the camera’s capture time by an even smaller fraction, like a handful of femtoseconds (hundreds of billions of times shorter). You’d capture one pulse when it was here, the next one when it was a little further along, the next one when it was further still, and so on. The end result is a movie that’s indistinguishable in many ways from one in which you’d captured that first pulse at high speed.
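To make the trick concrete, here is a minimal sketch of that repeated-pulse capture in Python. It is only an illustration, not how any real camera is driven: the 5 fs delay step, the idealized pulse moving through vacuum, and the names `pulse_position_m` and `capture_movie` are all assumptions made for the example.

```python
# Hypothetical illustration of repeated-pulse capture; not real instrument code.
SPEED_OF_LIGHT_M_S = 299_792_458   # speed of light in vacuum
REPETITION_PERIOD_S = 1e-3         # one pulse per millisecond (from the article)
DELAY_STEP_S = 5e-15               # "a handful of femtoseconds"; 5 fs is assumed


def pulse_position_m(time_since_launch_s: float) -> float:
    """Position of an idealized pulse front, identical on every shot."""
    return SPEED_OF_LIGHT_M_S * time_since_launch_s


def capture_movie(num_frames: int) -> list[tuple[float, float]]:
    """Return (wall-clock capture time, pulse position) for each frame.

    Frame k images pulse number k, but k * DELAY_STEP_S after that
    pulse was launched, so each frame sees the pulse a little further along.
    """
    frames = []
    for k in range(num_frames):
        launch_time_s = k * REPETITION_PERIOD_S
        delay_s = k * DELAY_STEP_S
        frames.append((launch_time_s + delay_s, pulse_position_m(delay_s)))
    return frames


movie = capture_movie(4)
for wall_clock_s, position_m in movie:
    print(f"captured at {wall_clock_s:.3e} s, pulse at {position_m * 1e6:.2f} um")
```

The frames are taken milliseconds apart in wall-clock time, yet the pulse positions they record are only femtoseconds apart in the pulse’s own timeline. That only works if every pulse behaves identically.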

This is highly effective — but you can’t always count on being able to produce a pulse of light a million times the exact same way. Perhaps you need to see what happens when it passes through a carefully engineered laser-etched lens that will be altered by the first pulse that strikes it. In cases like that, you need to capture that first pulse in real time — which means recording images not just with femtosecond precision, but only femtoseconds apart.

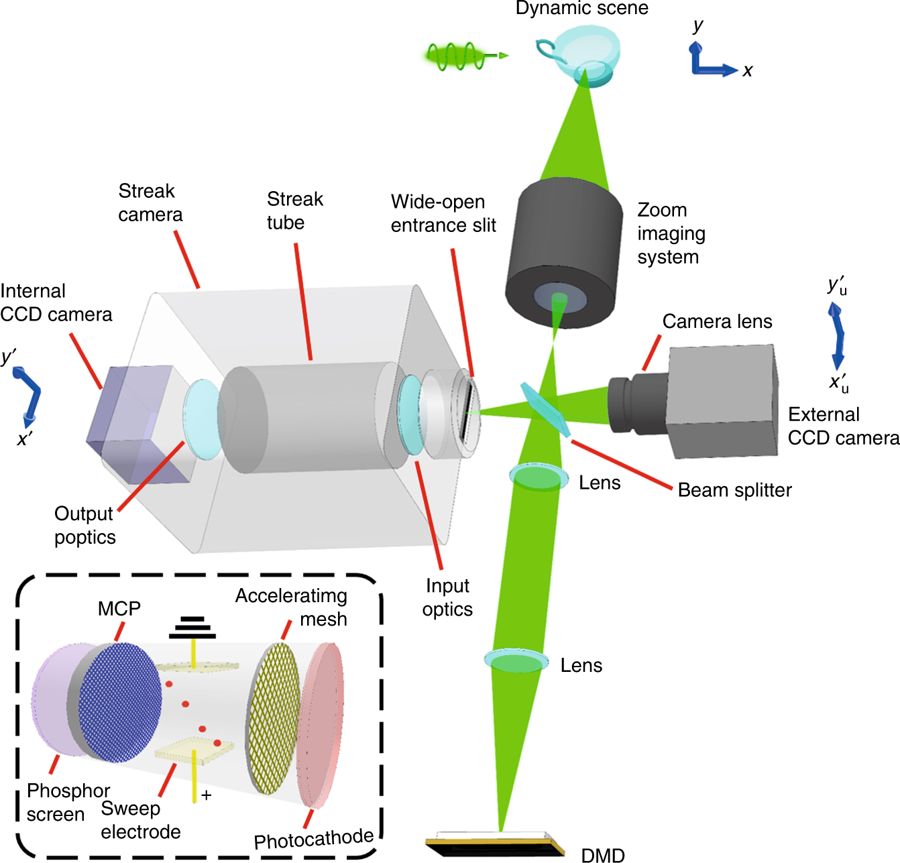

That’s what the T-CUP method does. It combines a streak camera with a second static camera and a data collection method used in tomography.

“We knew that by using only a femtosecond streak camera, the image quality would be limited. So to improve this, we added another camera that acquires a static image. Combined with the image acquired by the femtosecond streak camera, we can use what is called a Radon transformation to obtain high-quality images while recording ten trillion frames per second,” explained Lihong Wang, a co-author of the study. That clears things right up!

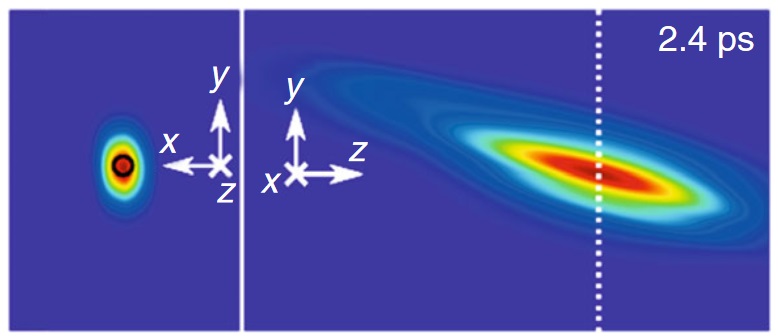

At any rate, the method allows images (technically, spatiotemporal datacubes) to be captured just 100 femtoseconds apart. That’s ten trillion per second, or it would be if they could run it for that long, but no storage array is fast enough to write ten trillion datacubes per second. So for now they can only keep it running for a handful of frames in a row: 25 in the experiment you see visualized here.
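For a sense of scale, the numbers above can be checked with a few lines of Python. This is just back-of-the-envelope arithmetic using the figures quoted in the article plus the vacuum speed of light. The variable names are made up for the example, and the total window treats the 25 frames as 25 intervals of 100 femtoseconds each, which is an approximation.

```python
# Back-of-the-envelope check of the quoted figures; variable names are illustrative.
FRAME_INTERVAL_S = 100e-15         # 100 femtoseconds between frames (from the article)
FRAMES_CAPTURED = 25               # frames in the experiment (from the article)
SPEED_OF_LIGHT_M_S = 299_792_458   # speed of light in vacuum

frames_per_second = 1 / FRAME_INTERVAL_S
recording_window_s = FRAMES_CAPTURED * FRAME_INTERVAL_S
distance_per_frame_m = SPEED_OF_LIGHT_M_S * FRAME_INTERVAL_S

print(f"{frames_per_second:.1e} frames per second")
print(f"{recording_window_s * 1e12:.1f} ps recorded in total")
print(f"{distance_per_frame_m * 1e6:.0f} micrometers travelled by light per frame")
```

In other words, the whole recording covers only a few picoseconds, and between one frame and the next the light advances by roughly 30 micrometers, less than the width of a typical human hair.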

Those 25 frames show a femtosecond-long laser pulse passing through a beam splitter. Note how, at this scale, the time it takes for the light to pass through the splitter itself is nontrivial. You have to take this stuff into account!

This level of precision in real time is unprecedented, but the team isn’t done yet.

“We already see possibilities for increasing the speed to up to one quadrillion (10^15) frames per second!” enthused Liang in the press release. Capturing the behavior of light at that scale and with this level of fidelity is leagues beyond what we were capable of just a few years ago and may open up entire new fields or lines of inquiry in physics and exotic materials.

Liang et al.’s paper appeared today in the journal Light: Science & Applications.